The Conference

On June 23rd, I was delighted to speak for the third year running at the Looking Ahead 2016 summit. I’ve talked before about how much I love my job, and our summit reinforces that for me every single year. I always leave feeling energized, as we take risk after risk to show customers where we are heading and how we can improve their lives.

My Session: Putting Your Cloud on Autopilot

First of all, I would absolutely love feedback on the session, so please send me an e-mail or tweet me. I really appreciate it.

Approaching this year’s session, it was clear to me that many of the customers I deal with day to day have moved beyond what I often call the “plumbing phase” of Cloud. I decided to reinforce the message by starting off with what Cloud means to me and to the Ahead team in general. Given how misused the term is in the industry, I’m fairly sure every session I do on Cloud for the rest of my life will start with 60 seconds on exactly what we mean by it.

Deployment Models

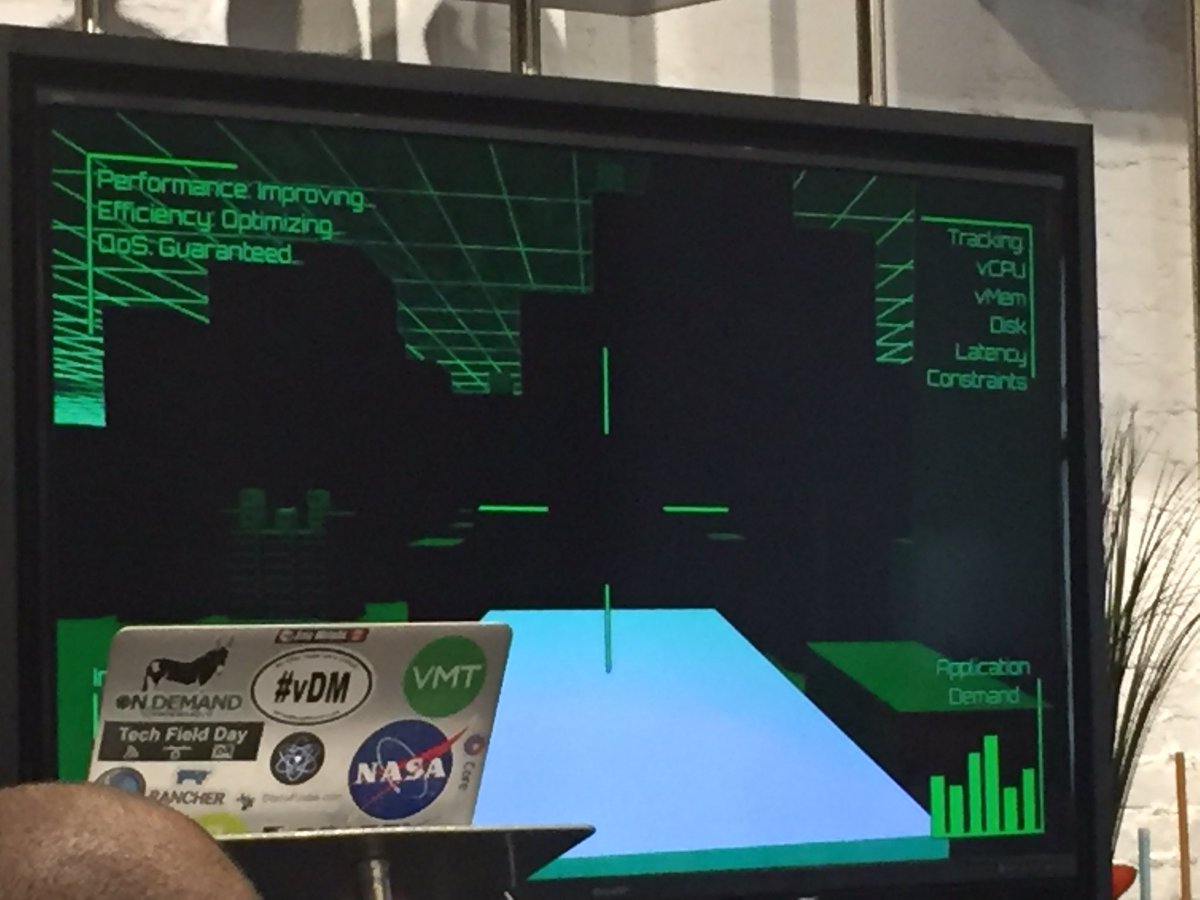

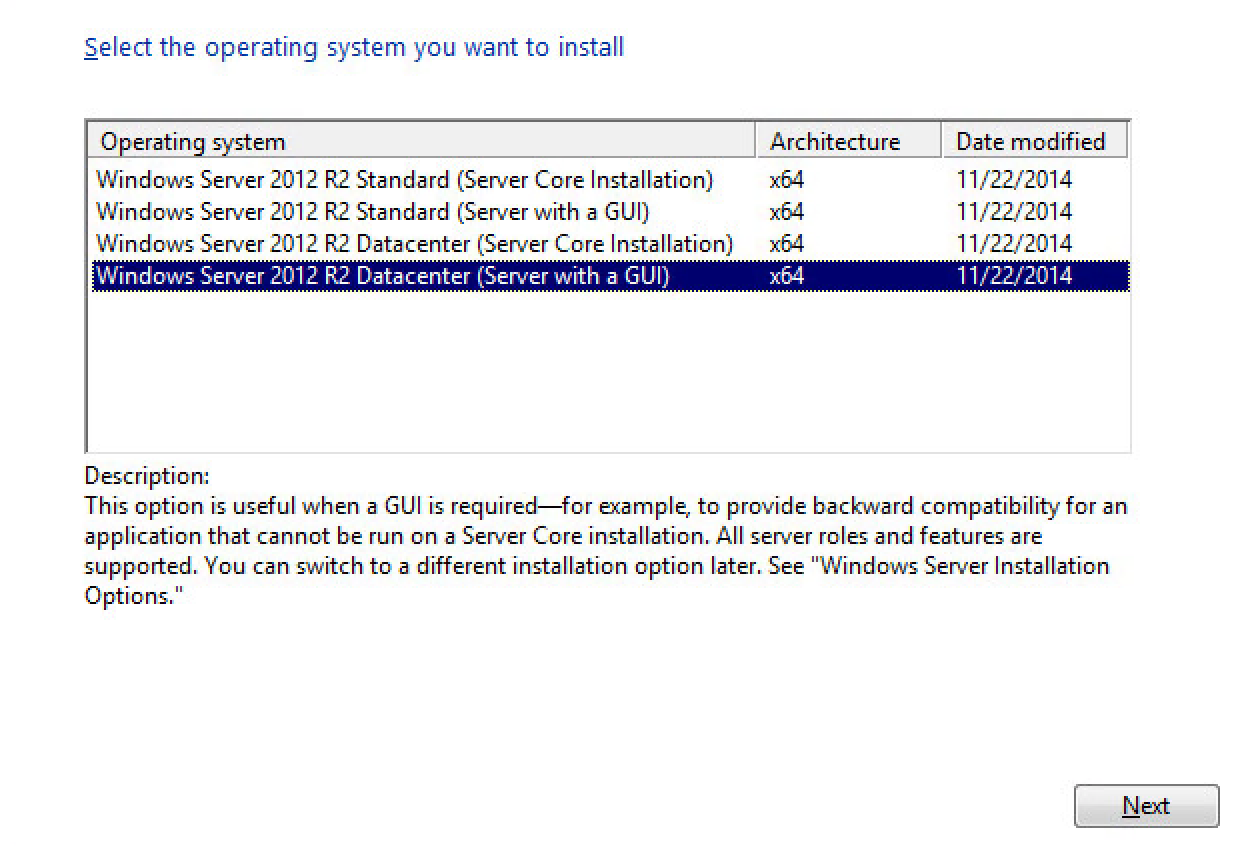

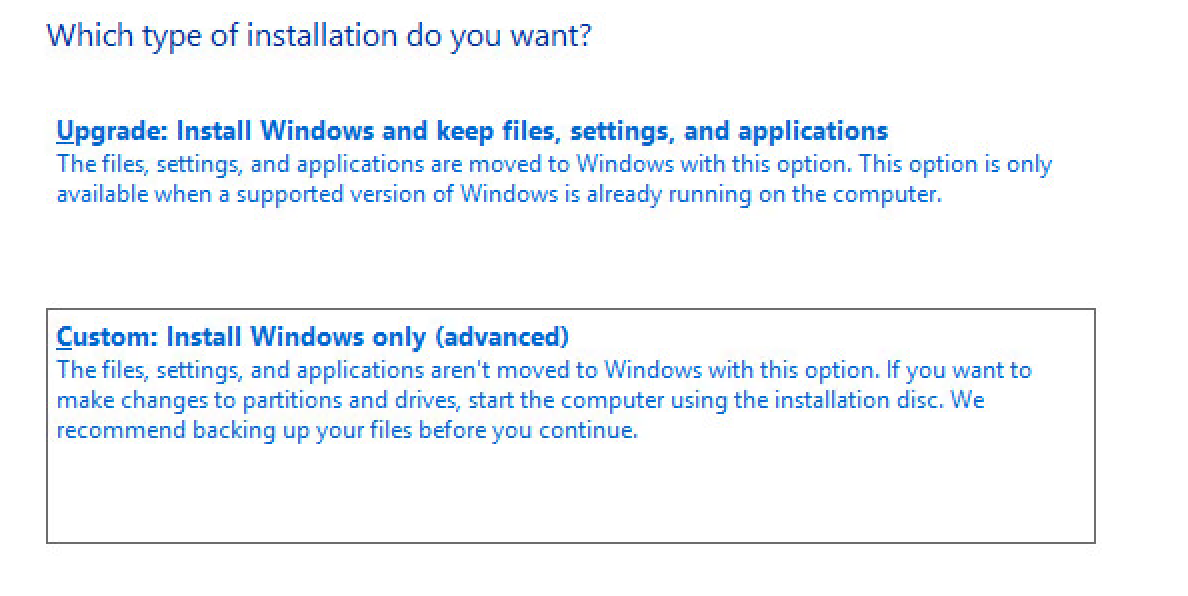

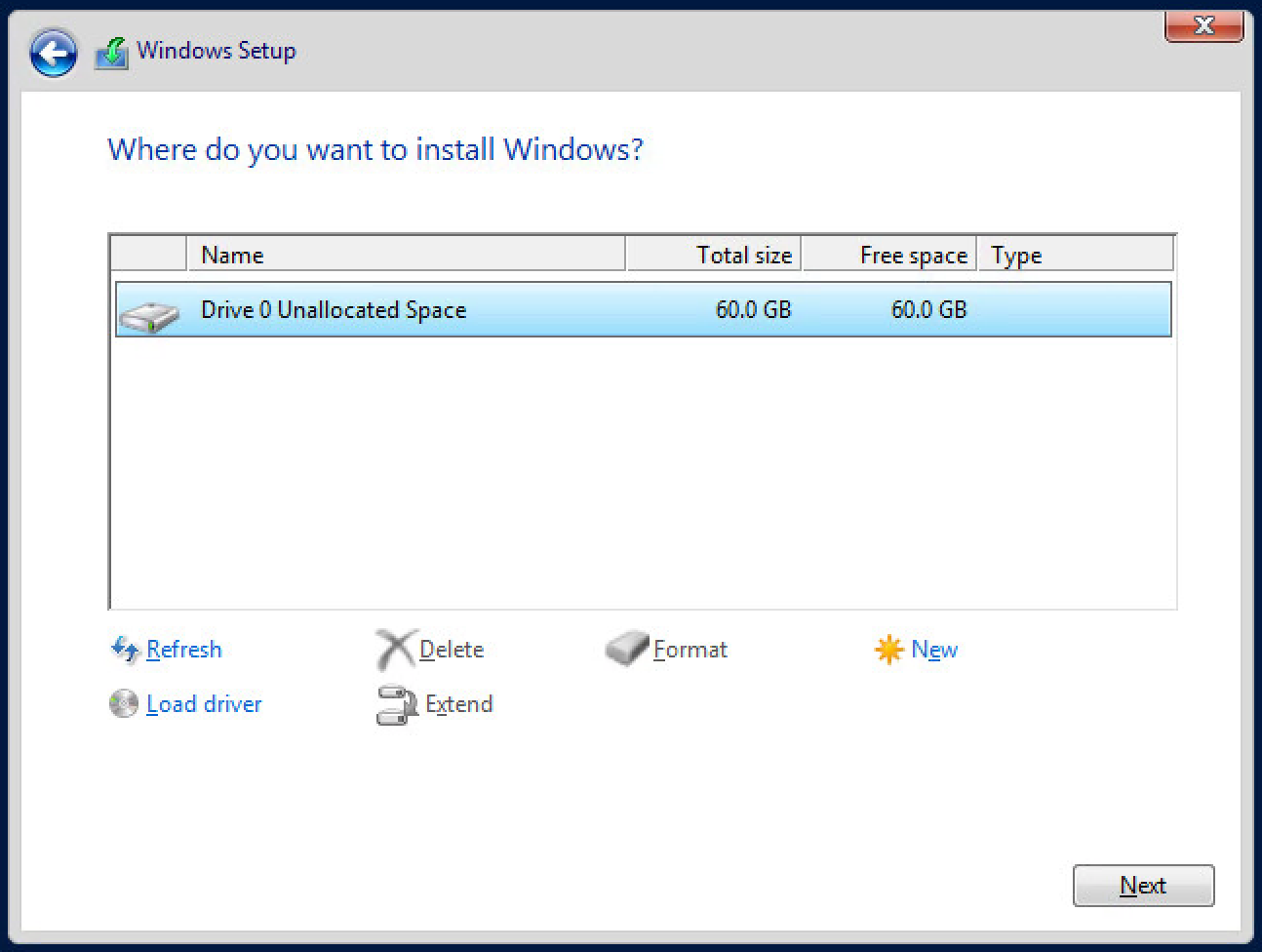

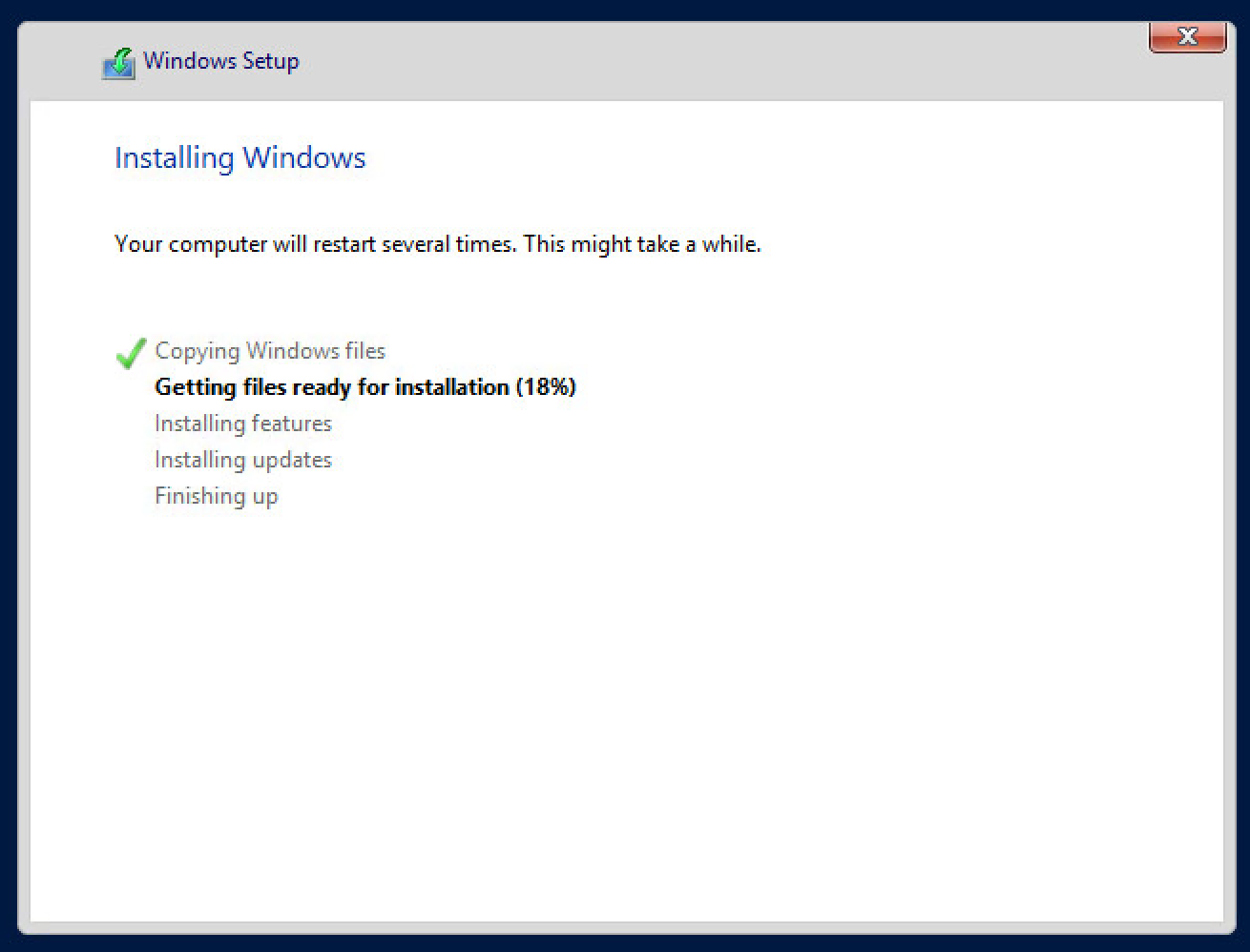

Once we had covered the basics of Infrastructure as a Service, it was time to move on to newer topics. In the past I’ve talked a lot about the Self-Healing Datacenter and how to actually make it a reality, but this time I wanted to focus on the different ways Automation can help across On-Premises and the Public Cloud.

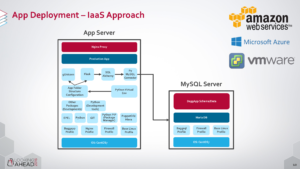

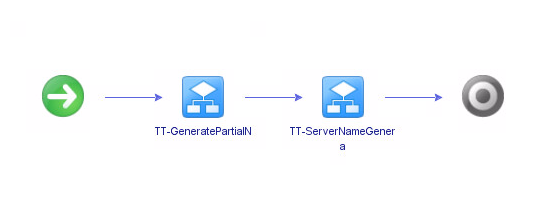

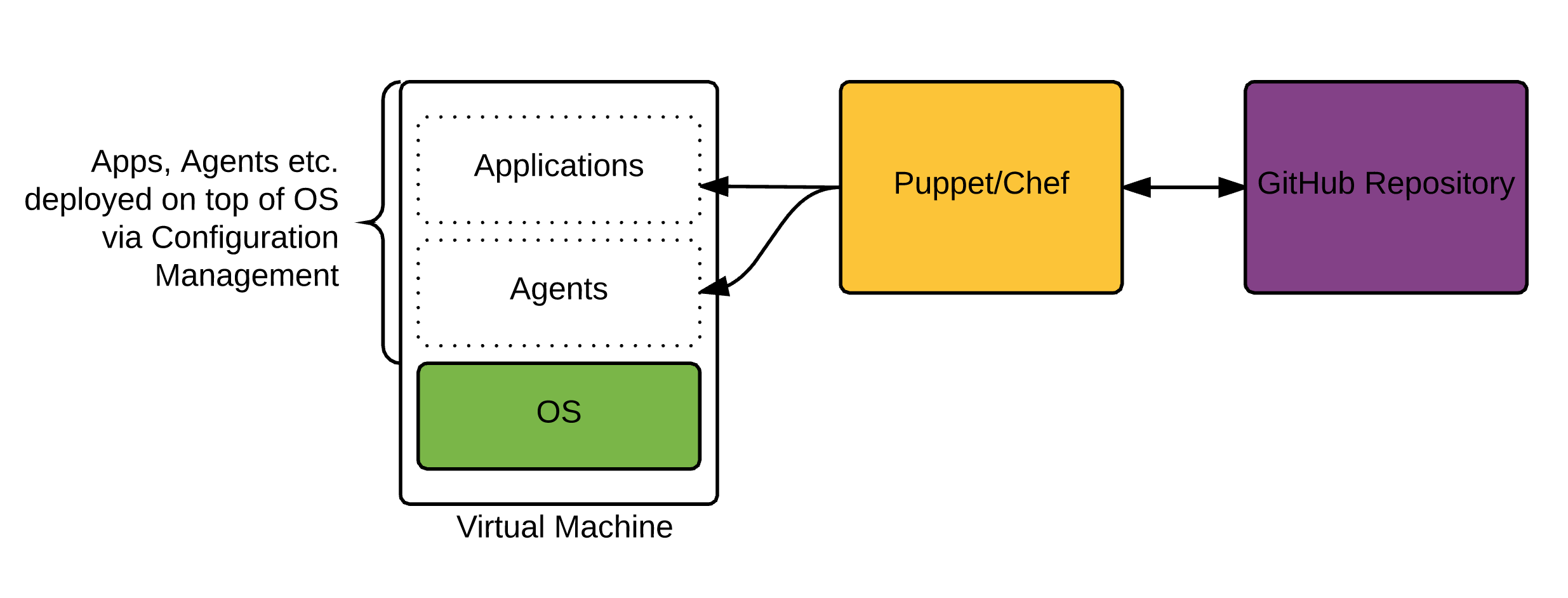

Essentially, going from an IaaS approach using Puppet…

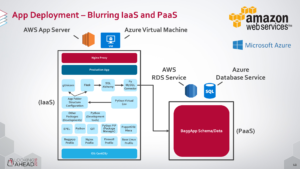

To a partial refactor using AWS RDS…

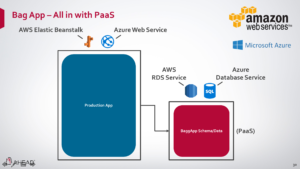

To a complete PaaS deployment…

All of this used the same application. I ran a demo showing this progression, as well as the various ways AWS failover works. The main point was to stress the choice and flexibility you give up as you embrace the different deployment models. I remember saying a few years ago that “No 2 clouds are the same”, and that seems to have taken off. I think it’s still valid, at least for now.

Self Healing

Then it was time to return to the Autopilot theme, this time using Google’s self-driving car to illustrate the mechanisms we use in the real world to create safety. Relating it back to Cloud, I walked through an example of event management using AWS Lambda and ServiceNow: an AWS Lambda function connected to ServiceNow so that, as nodes spun up or down, ServiceNow Change records were created automatically. I’ve got a post brewing on the benefits of Orchestration and Event-Driven Automation, which I hope to finish soon. It’s a key topic that is often overlooked these days, and one I’ve been discussing heavily with our team at Ahead.
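The Lambda function from the demo isn’t included in this post, so what follows is only a minimal sketch of how that kind of integration could look, under several assumptions. It assumes the function is triggered by an EC2 “Instance State-change Notification” event from CloudWatch Events (EventBridge), that “spun up” means the `running` state and “spun down” means `stopped` or `terminated`, and that records are created through the ServiceNow Table API (`/api/now/table/change_request`) with basic auth. The instance URL, the `SN_USER`/`SN_PASSWORD` environment variables and the helper names `build_change_record`/`build_request` are all hypothetical. It sticks to the Python standard library so it runs in Lambda without extra packages.

```python
import base64
import json
import os
import urllib.request

# Hypothetical ServiceNow instance; replace "example" with your own instance name.
SERVICENOW_URL = "https://example.service-now.com/api/now/table/change_request"

# Assumption: "spin up" = running, "spin down" = stopped or terminated.
TRACKED_STATES = {
    "running": "Node spun up",
    "stopped": "Node spun down",
    "terminated": "Node spun down",
}


def build_change_record(event):
    """Turn an EC2 state-change event into a change_request body, or None to skip."""
    detail = event.get("detail", {})
    state = detail.get("state")
    if state not in TRACKED_STATES:
        return None  # e.g. "pending" or "stopping": not worth a Change record
    instance_id = detail.get("instance-id", "unknown")
    region = event.get("region", "unknown")
    return {
        "short_description": f"{TRACKED_STATES[state]}: {instance_id}",
        "description": (
            f"Automated change raised by AWS Lambda: instance {instance_id} "
            f"in {region} entered state '{state}'."
        ),
    }


def build_request(record, user, password):
    """Prepare (without sending) a POST to the ServiceNow Table API using basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        SERVICENOW_URL,
        data=json.dumps(record).encode(),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def lambda_handler(event, context):
    """Lambda entry point: create one Change record per tracked state change."""
    record = build_change_record(event)
    if record is None:
        return {"created": False}
    # Hypothetical environment variable names for the integration user's credentials.
    req = build_request(record, os.environ["SN_USER"], os.environ["SN_PASSWORD"])
    with urllib.request.urlopen(req, timeout=10) as resp:
        body = json.load(resp)
    # The Table API wraps the new record in "result"; sys_id is its unique key.
    return {"created": True, "sys_id": body["result"]["sys_id"]}
```

For example, an event whose `detail` is `{"instance-id": "i-0123456789abcdef0", "state": "running"}` produces the short description `Node spun up: i-0123456789abcdef0`, while a `pending` event is skipped without calling ServiceNow. Keeping the event-to-record mapping separate from the HTTP call makes it easy to test the mapping offline and to swap in whatever change template your ServiceNow instance actually uses.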

Finally – The Cloud Experience

If there’s one thing I get fed up with at VMUGs and other user groups, it’s people standing up and saying that programming is the skill you need. Programming is important, but I feel many people just state the obvious about career development without truly explaining what it means to have a functioning Cloud and how you get there, across On-Premises and in the Datacenter.

Nick Rodriguez and I came up with a new term: #CloudLife (also a future blog post). How do you create an awesome experience that truly changes behaviours in an organization? I’ve also discussed this topic at length with my colleague, Dave Janusz. How do you make someone do something in your IT environment without having to tell them? I love asking this question because it sparks all sorts of interesting ideas about design best practices. I’m going to write more on this soon, but I hope people start to realize that the most successful clouds are the ones that create a user experience that works. To this day, I maintain that a book I read while studying a module on Human-Computer Interaction at university, combined with the module itself, taught me some of the most important lessons in IT.

If you haven’t read it, check it out. It’s a fun read and not entirely related to IT, but I loved it.

Remember, programming is important, but it’s not the only major skill.

With that, I’m going to end this post. I hope to finally sit down soon and write 3 posts I’ve been thinking and talking about for a while…

- What is #CloudLife?

- Skills of Successful Cloud Deployments

- Visual Orchestration vs Non-Visual Orchestration

These topics deserve more debate than they get today. I feel like DevOps initiatives, when done as a silo (yup, you heard me: people do DevOps in a silo that they call DevOps), have masked some of the changes IT has to make. IT also hasn’t always been able to articulate and truly create the services Developers need. Public Cloud is here, but there’s more to wrap around it. Do Developers use Visual Studio and connect directly to Azure? Do they use Docker + IaaS for more flexibility? How do you present the right services and Lego bricks of automation?

Time to dream more about… #CloudLife